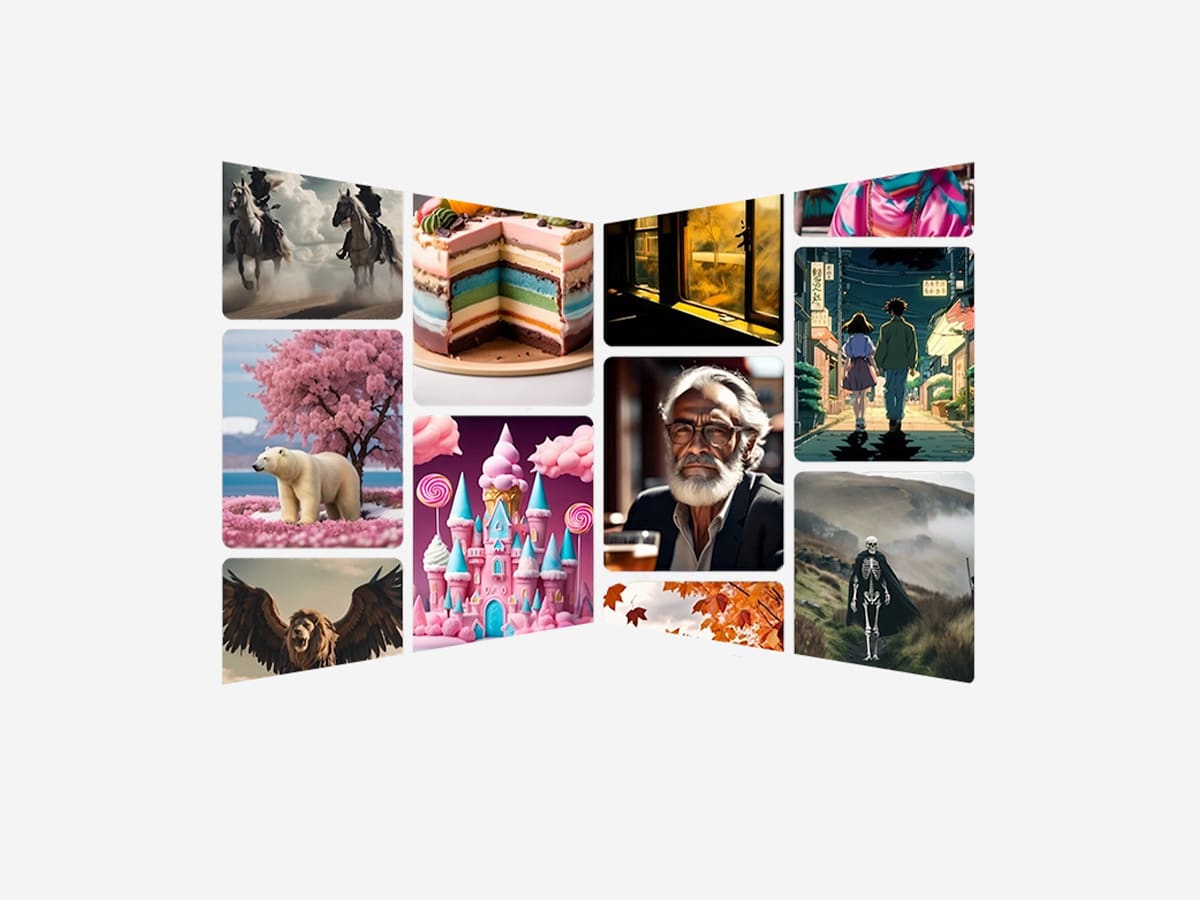

For many, AI peaked with the advent of user-prompted image generation. The ability to bring Terry Crews ‘ Snow White to life or transform Joe Biden into a Muppet represented a categoric shift in the way everyday people interacted with the technology, however, Stability AI, the company behind the groundbreaking Stable Diffusion model, may have now surpassed those pivotal moments.

The technology company has introduced Stable Video Diffusion, a brand-new AI model capable of animating existing images to create captivating videos. This new model builds upon the company’s existing Stable Diffusion text-to-image model and stands out as one of the few open-source video-generating models.

According to Stability AI, the Stable Video Diffusion represents a “latent video diffusion model for high-resolution, state-of-the-art text-to-video and image-to-video generation”. These latent diffusion models, initially “trained for 2D image synthesis have been turned into generative video models by inserting temporal layers and finetuning them on small, high-quality video datasets.”

Stable Video Diffusion has two models on offer—SVD and SVD-XT. SVD turns still images into 576×1024 videos with 14 frames, while SVD-XT, with the same architecture, bumps it to 24 frames. Both SVD and SVD-XT can create videos at speeds ranging from 3 to 30 frames per second. They can also perform multi-view synthesis from a single frame and are fine-tuned using multi-view datasets.

RELATED: Elon Musk’s New AI Chatbot ‘Grok’ is No Joke

“At the time of release in their foundational form, through external evaluation, we have found these models surpass the leading closed models in user preference studies,” the company said. A whitepaper that came out with Stable Video Diffusion (SVD) reveals that both SVD and SVD-XT underwent initial training on a dataset comprising millions of videos. The subsequent ‘fine-tuning’ process involved a more condensed set, ranging from hundreds of thousands to around a million clips. Now, here’s where it gets interesting (and a bit mysterious).

The paper does not explicitly state the source of these videos, hinting at the possibility that many might have been drawn from public research datasets. So, it still remains pretty unclear whether any of the videos used in the training process are subject to copyright, a matter that has been a significant point of debate in the context of text-to-image generating models. Despite its advanced (and unique) capabilities, Stable Video Diffusion has its own set of inherent limitations and is not quite “there” yet.

Notably, on the models’ Hugging Face pages, where researchers can apply to access Stable Video Diffusion, it is explicitly stated that the models cannot generate videos without motion or slow camera pans, be controlled by text, render text legibly (at least not consistently), or consistently generate faces and people “properly.”

Featured Video from Man of Many

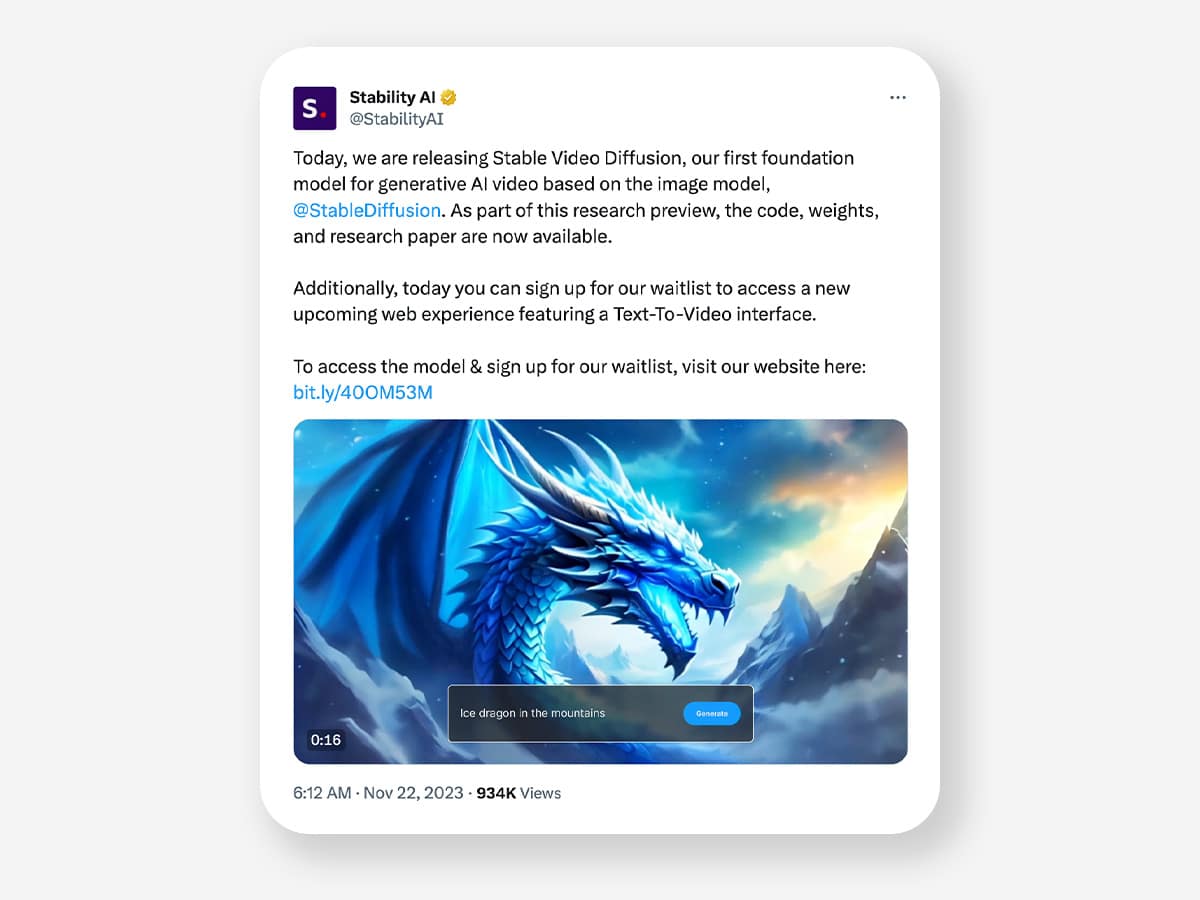

However, Stability AI has some big dreams for Stable Video Diffusion, and they’re not looking to hit the brakes anytime soon. The company is in the process of developing “a variety” of models that will both “build on and extend” the capabilities of SVD and SVD-XT. Additionally, a “text-to-video” tool is also in the works to introduce text prompting capabilities to the models accessible on the web.

At the moment, Stable Video Diffusion is exclusively available for research purposes only and is not yet open for real-world or commercial use. Interested users can register to join a waitlist to get an opportunity to access the “upcoming web experience featuring a text-to-video interface.”

Featured Video from Man of Many